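Caffe Source Code Study Notes (3): Net

Net: Basic Introduction

A net jointly defines a function and its gradient through composition and automatic differentiation.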

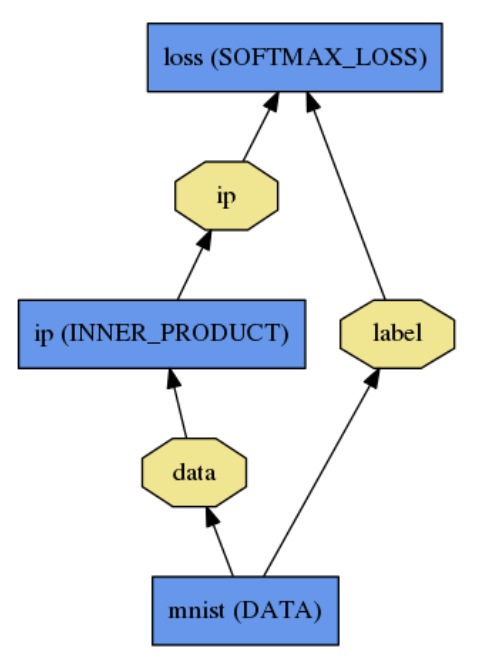
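To make "composition plus automatic differentiation" concrete, here is a minimal sketch. It is not Caffe code: `ToyLayer`, `Scale`, `Square`, `ToyNet` and `MakeToyNet` are hypothetical names, the net is a simple chain rather than a general DAG, and each blob is reduced to a single `double`. Forward runs the layers in order; Backward applies the chain rule in reverse order.

```cpp
#include <cassert>
#include <memory>
#include <vector>

// Hypothetical scalar layer: one input number, one output number.
struct ToyLayer {
  virtual ~ToyLayer() {}
  virtual double Forward(double bottom) = 0;
  // Takes d(loss)/d(top) and returns d(loss)/d(bottom).
  virtual double Backward(double top_diff) = 0;
};

// top = weight * bottom
struct Scale : ToyLayer {
  explicit Scale(double weight) : weight_(weight) {}
  double Forward(double bottom) override { return weight_ * bottom; }
  double Backward(double top_diff) override { return weight_ * top_diff; }
  double weight_;
};

// top = bottom * bottom
struct Square : ToyLayer {
  Square() : bottom_(0.0) {}
  double Forward(double bottom) override {
    bottom_ = bottom;  // cached because Backward needs it
    return bottom * bottom;
  }
  double Backward(double top_diff) override {
    return 2.0 * bottom_ * top_diff;
  }
  double bottom_;
};

// Hypothetical chain-shaped net: the function is the composition of its layers.
struct ToyNet {
  ToyNet() : ran_forward_(false) {}

  double Forward(double input) {
    double value = input;
    for (std::size_t i = 0; i < layers.size(); ++i) {
      value = layers[i]->Forward(value);
    }
    ran_forward_ = true;
    return value;
  }

  // Returns d(output)/d(input) at the point used in the last Forward call.
  double Backward() {
    assert(ran_forward_ && "call Forward before Backward");
    double diff = 1.0;
    for (std::size_t i = layers.size(); i > 0; --i) {
      diff = layers[i - 1]->Backward(diff);
    }
    return diff;
  }

  std::vector<std::unique_ptr<ToyLayer> > layers;
  bool ran_forward_;
};

// Builds f(x) = (3x)^2 out of two layers.
ToyNet MakeToyNet() {
  ToyNet net;
  net.layers.push_back(std::unique_ptr<ToyLayer>(new Scale(3.0)));
  net.layers.push_back(std::unique_ptr<ToyLayer>(new Square()));
  return net;
}
```

With `MakeToyNet()`, the net computes f(x) = (3x)^2, so `Forward(2.0)` returns 36 and the following `Backward()` returns f'(2) = 18 * 2 = 36.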

A net is a DAG (directed acyclic graph) of Layers. In Caffe it is usually defined in a prototxt file.

For example:

    name: "LogReg"
    layer {
      name: "mnist"
      type: "Data"
      top: "data"
      top: "label"
      data_param {
        source: "input_leveldb"
        batch_size: 64
      }
    }
    layer {
      name: "ip"
      type: "InnerProduct"
      bottom: "data"
      top: "ip"
      inner_product_param {
        num_output: 2
      }
    }
    layer {
      name: "loss"
      type: "SoftmaxWithLoss"
      bottom: "ip"
      bottom: "label"
      top: "loss"
    }

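This defines a simple logistic regression net: a Data layer produces the "data" and "label" blobs, an InnerProduct layer maps "data" to "ip", and a SoftmaxWithLoss layer computes "loss" from "ip" and "label".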

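It is worth emphasizing that in Caffe the definition of the network structure is independent of its implementation. I consider this a major advantage over deep learning frameworks such as PyTorch, and an important reason why Caffe became a de facto standard on the deployment side.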

Net: Implementation Details

Let's first look at the NetParameter proto:

    message NetParameter {
      optional string name = 1; // consider giving the network a name
      // DEPRECATED. See InputParameter. The input blobs to the network.
      repeated string input = 3;
      // DEPRECATED. See InputParameter. The shape of the input blobs.
      repeated BlobShape input_shape = 8;

      // 4D input dimensions -- deprecated. Use "input_shape" instead.
      // If specified, for each input blob there should be four
      // values specifying the num, channels, height and width of the input blob.
      // Thus, there should be a total of (4 * #input) numbers.
      repeated int32 input_dim = 4;

      // Whether the network will force every layer to carry out backward operation.
      // If set False, then whether to carry out backward is determined
      // automatically according to the net structure and learning rates.
      optional bool force_backward = 5 [default = false];
      // The current "state" of the network, including the phase, level, and stage.
      // Some layers may be included/excluded depending on this state and the states
      // specified in the layers' include and exclude fields.
      optional NetState state = 6;

      // Print debugging information about results while running Net::Forward,
      // Net::Backward, and Net::Update.
      optional bool debug_info = 7 [default = false];

      // The layers that make up the net. Each of their configurations, including
      // connectivity and behavior, is specified as a LayerParameter.
      repeated LayerParameter layer = 100; // ID 100 so layers are printed last.

      // DEPRECATED: use 'layer' instead.
      repeated V1LayerParameter layers = 2;
    }

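Everything here is easy to understand, except NetState, whose purpose is not obvious at first sight.

Reading further, the two proto definitions related to NetState are: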

    message NetState {
      optional Phase phase = 1 [default = TEST];
      optional int32 level = 2 [default = 0];
      repeated string stage = 3;
    }

    message NetStateRule {
      // Set phase to require the NetState have a particular phase (TRAIN or TEST)
      // to meet this rule.
      optional Phase phase = 1;

      // Set the minimum and/or maximum levels in which the layer should be used.
      // Leave undefined to meet the rule regardless of level.
      optional int32 min_level = 2;
      optional int32 max_level = 3;

      // Customizable sets of stages to include or exclude.
      // The net must have ALL of the specified stages and NONE of the specified
      // "not_stage"s to meet the rule.
      // (Use multiple NetStateRules to specify conjunctions of stages.)
      repeated string stage = 4;
      repeated string not_stage = 5;
    }

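According to "[Discussion] How to specify all-in-one networks #3864", the main purpose of these parameters is to avoid needing several prototxt files for deployment and training.

In practice, the deployment and training prototxt files are mostly identical; only the inputs, the outputs, and a few layers differ. Keeping them in separate files means that every change to a shared layer has to be made twice.

With these parameters, the network structure can be controlled by the net's state, so deployment and training can share a single prototxt: an "all-in-one network".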
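The matching logic described in the NetStateRule comments can be sketched as follows. This is a simplified stand-alone illustration, not the Caffe implementation: `ToyNetState`, `ToyNetStateRule` and `LayerIncluded` are hypothetical plain structs and helpers standing in for the protobuf messages, and the `has_*` flags mimic optional fields. The sketch assumes a layer with no rules is always kept, a layer with include rules is kept if any of them matches, and a layer with exclude rules is dropped if any of them matches.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <vector>

enum Phase { TRAIN, TEST };

// Hypothetical stand-in for the NetState message.
struct ToyNetState {
  Phase phase = TEST;
  int level = 0;
  std::vector<std::string> stage;
};

// Hypothetical stand-in for the NetStateRule message.
struct ToyNetStateRule {
  bool has_phase = false;
  Phase phase = TEST;
  bool has_min_level = false;
  int min_level = 0;
  bool has_max_level = false;
  int max_level = 0;
  std::vector<std::string> stage;
  std::vector<std::string> not_stage;
};

static bool Contains(const std::vector<std::string>& items,
                     const std::string& item) {
  return std::find(items.begin(), items.end(), item) != items.end();
}

// True if the state satisfies every condition the rule actually sets.
bool StateMeetsRule(const ToyNetState& state, const ToyNetStateRule& rule) {
  if (rule.has_phase && rule.phase != state.phase) return false;
  if (rule.has_min_level && state.level < rule.min_level) return false;
  if (rule.has_max_level && state.level > rule.max_level) return false;
  // The state must have ALL of the rule's stages...
  for (const std::string& s : rule.stage) {
    if (!Contains(state.stage, s)) return false;
  }
  // ...and NONE of the rule's not_stages.
  for (const std::string& s : rule.not_stage) {
    if (Contains(state.stage, s)) return false;
  }
  return true;
}

// Decides whether a layer with the given include/exclude rules is kept.
bool LayerIncluded(const ToyNetState& state,
                   const std::vector<ToyNetStateRule>& include,
                   const std::vector<ToyNetStateRule>& exclude) {
  // Assumption of this sketch: a layer uses include rules or exclude rules,
  // not both.
  assert(include.empty() || exclude.empty());
  bool included = include.empty();
  for (const ToyNetStateRule& rule : exclude) {
    if (StateMeetsRule(state, rule)) included = false;
  }
  for (const ToyNetStateRule& rule : include) {
    if (StateMeetsRule(state, rule)) included = true;
  }
  return included;
}
```

For instance, a data layer carrying an include rule with `phase = TRAIN` is kept when the net state is TRAIN and filtered out otherwise, which is what lets one prototxt serve both training and deployment.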

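The next part worth reading is how the network is constructed.

A Net constructs its Layers one by one, in order.

For each Layer, construction roughly consists of three parts: AppendTop, AppendBottom, and AppendParam. We focus on the first two.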

    // Helper for Net::Init: add a new bottom blob to the net.
    template <typename Dtype>
    int Net<Dtype>::AppendBottom(const NetParameter& param, const int layer_id,
        const int bottom_id, set<string>* available_blobs,
        map<string, int>* blob_name_to_idx) {
      const LayerParameter& layer_param = param.layer(layer_id);
      const string& blob_name = layer_param.bottom(bottom_id);
      if (available_blobs->find(blob_name) == available_blobs->end()) {
        LOG(FATAL) << "Unknown bottom blob '" << blob_name << "' (layer '"
                   << layer_param.name() << "', bottom index " << bottom_id << ")";
      }
      const int blob_id = (*blob_name_to_idx)[blob_name];
      LOG_IF(INFO, Caffe::root_solver())
          << layer_names_[layer_id] << " <- " << blob_name;
      bottom_vecs_[layer_id].push_back(blobs_[blob_id].get());
      bottom_id_vecs_[layer_id].push_back(blob_id);
      // ??? Why erase here? One top blob can feed several layers.
      available_blobs->erase(blob_name);
      bool need_backward = blob_need_backward_[blob_id];
      // Check if the backpropagation on bottom_id should be skipped
      if (layer_param.propagate_down_size() > 0) {
        need_backward = layer_param.propagate_down(bottom_id);
      }
      bottom_need_backward_[layer_id].push_back(need_backward);
      return blob_id;
    }

    // Helper for Net::Init: add a new top blob to the net.
    template <typename Dtype>
    void Net<Dtype>::AppendTop(const NetParameter& param, const int layer_id,
                               const int top_id, set<string>* available_blobs,
                               map<string, int>* blob_name_to_idx) {
      shared_ptr<LayerParameter> layer_param(
          new LayerParameter(param.layer(layer_id)));
      const string& blob_name = (layer_param->top_size() > top_id) ?
          layer_param->top(top_id) : "(automatic)";
      // Check if we are doing in-place computation
      if (blob_name_to_idx && layer_param->bottom_size() > top_id &&
          blob_name == layer_param->bottom(top_id)) {
        // In-place computation
        LOG_IF(INFO, Caffe::root_solver())
            << layer_param->name() << " -> " << blob_name << " (in-place)";
        top_vecs_[layer_id].push_back(blobs_[(*blob_name_to_idx)[blob_name]].get());
        top_id_vecs_[layer_id].push_back((*blob_name_to_idx)[blob_name]);
      } else if (blob_name_to_idx &&
                 blob_name_to_idx->find(blob_name) != blob_name_to_idx->end()) {
        // If we are not doing in-place computation but have duplicated blobs,
        // raise an error.
        LOG(FATAL) << "Top blob '" << blob_name
                   << "' produced by multiple sources.";
      } else {
        // Normal output.
        if (Caffe::root_solver()) {
          LOG(INFO) << layer_param->name() << " -> " << blob_name;
        }
        shared_ptr<Blob<Dtype> > blob_pointer(new Blob<Dtype>());
        const int blob_id = blobs_.size();
        // blob_id is read before push_back, so blob ids are integers counting up from 0.
        // Every blob_id is assigned here in AppendTop.
        blobs_.push_back(blob_pointer);
        blob_names_.push_back(blob_name);
        blob_need_backward_.push_back(false);
        if (blob_name_to_idx) { (*blob_name_to_idx)[blob_name] = blob_id; }
        top_id_vecs_[layer_id].push_back(blob_id);
        top_vecs_[layer_id].push_back(blob_pointer.get());
      }
      // Maintain the set of top blobs seen so far; it is used to validate the
      // bottom blob names of the layers that follow.
      // available_blobs must be checked for NULL because an anonymous top blob may
      // be created, in which case both available_blobs and blob_name_to_idx are NULL.
      if (available_blobs) { available_blobs->insert(blob_name); }
    }

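The code is fairly easy to follow; see the comments for the details. The one point worth noting is how available_blobs is maintained. In AppendBottom:

    // ??? Why erase here? One top blob can feed several layers.
    available_blobs->erase(blob_name);

Each time a bottom is processed, it is removed from available_blobs.

Yet a top blob can obviously be fed into several layers, so at first this erase made no sense to me.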

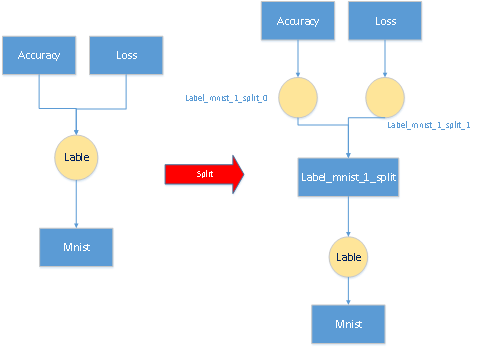

Searching around, I found "What does InsertSplit() / SplitLayer do? #767".

It turns out that:

    // Create split layer for any input blobs used by other layers as bottom
    // blobs more than once.

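In other words, a top blob that is used as a bottom by more than one layer is duplicated into separate copies, so each consumer gets a blob of its own.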
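The interaction between the erase in AppendBottom and the split layers can be sketched as below. This is a simplified illustration under stated assumptions, not Caffe's InsertSplits: `ToyLayerDef`, `BottomsResolve`, `SplitName` and the `_split` naming scheme are hypothetical, layers carry only names, and in-place layers (a top named like its bottom) are assumed absent. `BottomsResolve` mimics the available_blobs bookkeeping; `InsertSplits` rewrites the layer list so every shared top goes through a split layer first.

```cpp
#include <cassert>
#include <map>
#include <set>
#include <string>
#include <vector>

// Hypothetical minimal layer description: names only, no parameters.
struct ToyLayerDef {
  std::string name;
  std::vector<std::string> bottoms;
  std::vector<std::string> tops;
};

// Mimics the available_blobs bookkeeping: each bottom must be available and
// is erased once consumed; each top becomes available. Returns false on the
// first unavailable bottom. Blobs never consumed end up in *leftover.
bool BottomsResolve(const std::vector<ToyLayerDef>& net,
                    std::set<std::string>* leftover) {
  std::set<std::string> available;
  for (const ToyLayerDef& layer : net) {
    for (const std::string& bottom : layer.bottoms) {
      if (available.erase(bottom) == 0) return false;
    }
    for (const std::string& top : layer.tops) available.insert(top);
  }
  if (leftover) *leftover = available;
  return true;
}

// Hypothetical naming scheme for the copies produced by a split layer.
static std::string SplitName(const std::string& blob, int copy) {
  return blob + "_split_" + std::to_string(copy);
}

// Inserts a split layer after every top that is consumed more than once and
// points each consumer at its own copy.
std::vector<ToyLayerDef> InsertSplits(const std::vector<ToyLayerDef>& net) {
  std::map<std::string, int> use_count;
  for (const ToyLayerDef& layer : net) {
    for (const std::string& bottom : layer.bottoms) ++use_count[bottom];
  }
  std::map<std::string, int> next_copy;  // next unused copy per shared blob
  std::set<std::string> produced;
  std::vector<ToyLayerDef> result;
  for (ToyLayerDef layer : net) {  // copy, because bottoms may be renamed
    for (std::string& bottom : layer.bottoms) {
      if (use_count[bottom] > 1) bottom = SplitName(bottom, next_copy[bottom]++);
    }
    result.push_back(layer);
    for (const std::string& top : layer.tops) {
      const bool first_time = produced.insert(top).second;
      assert(first_time && "sketch assumes no in-place layers");
      (void)first_time;
      if (use_count[top] > 1) {
        ToyLayerDef split;
        split.name = top + "_split";
        split.bottoms.push_back(top);
        for (int i = 0; i < use_count[top]; ++i) {
          split.tops.push_back(SplitName(top, i));
        }
        result.push_back(split);
      }
    }
  }
  return result;
}
```

On a net where "data" feeds two layers, `BottomsResolve` fails on the second consumer, while the output of `InsertSplits` resolves cleanly because each consumer now reads its own copy. The blobs left over at the end are exactly those that no layer consumed, which in Caffe's Net::Init are treated as the net's outputs.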

For more detail, see "Net的网络层的构建(源码分析)" (Construction of a Net's layers: source code analysis).
