(CSE 599W)Reverse Mode Autodiff

背景

怎么算微分。。通常有三种方法。

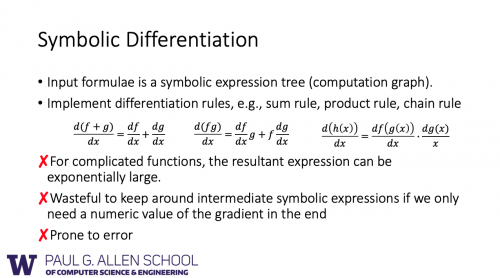

- Symbolic Differentiation

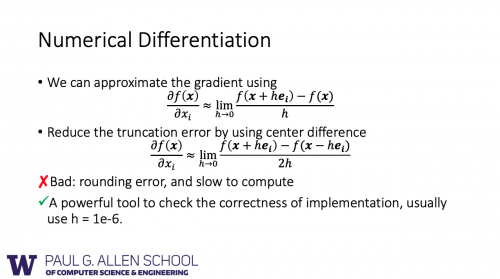

- Numerical Differentiation

- Automatic Differentiation (auto diff)

auto diff中两种主流的方式分别是forward-mode和reverse-mode 由于forward-mode的方法中,计算的时间复杂度是O(n),n是输入的参数个数。而reverse-mode中,计算的时间复杂度是O(m),m是输出节点的个数。在dnn中,n往往很大,远大于m,因此这里主要介绍reverse-mode auto diff方法。

backprop和reverse mode auto diff的区别

看了reverse mode auto diff的过程,感觉和backprop是一回事呀。。。 实际上,backprop指的是训练神经网络根据loss的gradient来更新weight的过程,而auto diff是backprop使用的一个用来计算gradient的 technique.

Bakpropagation refers to the whole process of training an artificial neural network using multiple backpropagation steps, each of which computes gradients and uses them to perform a Gradient Descent step. In contrast, reverse-mode auto diff is simply a technique used to compute gradients efficiently and it happens to be used by backpropagation.

chain rule

chain rule..也就是微积分里的链式法则 更准确的说,是多变量的chain rule 简单来说,就是同一条路径相乘,不同路径相加 MULTI-VARIABLE CHAIN RULE

可以参考 MULTI-VARIABLE CHAIN RULE

实现

放一个CSE 599W Systems for ML 课程的assigment 1,比较简单,只有add,mul,matmul这几个算子。(因为重点并不在于支持算子的种类) 需要实现几个算子的forward和梯度的计算。。这部分比较好写。。 然后需要实现executor的run函数。。做个拓扑排序然后计算即可。。

1 def run(self, feed_dict):

2 """Computes values of nodes in eval_node_list given computation graph.

3 Parameters

4 ----------

5 feed_dict: list of variable nodes whose values are supplied by user.

6

7 Returns

8 -------

9 A list of values for nodes in eval_node_list.

10 """

11 node_to_val_map = dict(feed_dict)

12 print("self.eval_node_list={}".format(self.eval_node_list))

13 # Traverse graph in topological sort order and compute values for all nodes.

14 topo_order = find_topo_sort(self.eval_node_list)

15 # 按照拓扑排序的顺序来计算,保证计算当前节点的值时,其依赖的值都计算出来了。

16 """TODO: Your code here"""

17 for node in topo_order:

18 if isinstance(node.op, PlaceholderOp):

19 continue

20 # 在实际计算的时候,要用具体的值来替代node

21 input_vals = [node_to_val_map[x] for x in node.inputs]

22 res = node.op.compute(node,input_vals)

23 node_to_val_map[node] = res

24

25 # Collect node values.

26 # 因为是按照topo order计算的,最后再变为和输入相同的顺序去输出

27 node_val_results = [node_to_val_map[node] for node in self.eval_node_list]

28 return node_val_results

29

然后是计算梯度这部分 需要注意 node_to_output_grads_list,是一个dict, key是node,val其实是一个list,表示node对哪些后续节点的gradient有作用.

然后input_grads,表示的是node的input节点相对node的gradient

听起来有些让人费解。。看个具体的例子

对于下面这个计算图,相关变量的值如下

1def test_multi_var_chain_rule():

2 x1 = ad.Variable(name="x1")

3 x2 = x1+3

4 x3 = x1+5

5 y = x2*x3

6

7 grad_x1, grad_x2, grad_x3 = ad.gradients(y, [x1, x2, x3])

8

9 executor = ad.Executor([y, grad_x1, grad_x2, grad_x3])

10 x1_val = 1 * np.ones(3)

11 y_val, grad_x1_val, grad_x2_val, grad_x3_val = executor.run(feed_dict = {x1 : x1_val})

12

1

2nosetests -s -v autodiff_test.py 130 ↵

3autodiff_test.custom_test ... output_node=(x1+((x2*x3)*x1))

4node.name=(x1+((x2*x3)*x1))

5node_to_output_grads_list[node]=[Oneslike((x1+((x2*x3)*x1)))]

6grad=Oneslike((x1+((x2*x3)*x1)))

7input_grads=[Oneslike((x1+((x2*x3)*x1))), Oneslike((x1+((x2*x3)*x1)))]

8

9node.name=((x2*x3)*x1)

10node_to_output_grads_list[node]=[Oneslike((x1+((x2*x3)*x1)))]

11grad=Oneslike((x1+((x2*x3)*x1)))

12input_grads=[(Oneslike((x1+((x2*x3)*x1)))*x1), (Oneslike((x1+((x2*x3)*x1)))*(x2*x3))]

13

14node.name=(x2*x3)

15node_to_output_grads_list[node]=[(Oneslike((x1+((x2*x3)*x1)))*x1)]

16grad=(Oneslike((x1+((x2*x3)*x1)))*x1)

17input_grads=[((Oneslike((x1+((x2*x3)*x1)))*x1)*x3), ((Oneslike((x1+((x2*x3)*x1)))*x1)*x2)]

18

19node.name=x3

20node_to_output_grads_list[node]=[((Oneslike((x1+((x2*x3)*x1)))*x1)*x2)]

21grad=((Oneslike((x1+((x2*x3)*x1)))*x1)*x2)

22input_grads=None

23

24node.name=x2

25node_to_output_grads_list[node]=[((Oneslike((x1+((x2*x3)*x1)))*x1)*x3)]

26grad=((Oneslike((x1+((x2*x3)*x1)))*x1)*x3)

27input_grads=None

28

29node.name=x1

30node_to_output_grads_list[node]=[Oneslike((x1+((x2*x3)*x1))), (Oneslike((x1+((x2*x3)*x1)))*(x2*x3))]

31grad=(Oneslike((x1+((x2*x3)*x1)))+(Oneslike((x1+((x2*x3)*x1)))*(x2*x3)))

32input_grads=None

33

34length of node_to_output_grads_list = {(x1+((x2*x3)*x1)): [Oneslike((x1+((x2*x3)*x1)))], x1: [Oneslike((x1+((x2*x3)*x1))), (Oneslike((x1+((x2*x3)*x1)))*(x2*x3))], ((x2*x3)*x1): [Oneslike((x1+((x2*x3)*x1)))], (x2*x3): [(Oneslike((x1+((x2*x3)*x1)))*x1)], x2: [((Oneslike((x1+((x2*x3)*x1)))*x1)*x3)], x3: [((Oneslike((x1+((x2*x3)*x1)))*x1)*x2)]}

35self.eval_node_list=[(x1+((x2*x3)*x1)), (Oneslike((x1+((x2*x3)*x1)))+(Oneslike((x1+((x2*x3)*x1)))*(x2*x3))), ((Oneslike((x1+((x2*x3)*x1)))*x1)*x3), ((Oneslike((x1+((x2*x3)*x1)))*x1)*x2)]

36

1

2 for node in reverse_topo_order:

3 # print("node.name={} op={}".format(node.name, type(node.op)))

4 grad = sum_node_list(node_to_output_grads_list[node])

5 # print("grad={}".format(grad))

6 input_grads = node.op.gradient(node, grad)

7 # input_grads表示的node的input节点相对node的gradient

8 # print("input_grads={}".format(input_grads))

9 node_to_output_grad[node] = grad

10 for idx, inp in enumerate(node.inputs):

11 node_to_output_grads_list[inp] = node_to_output_grads_list.get(inp, [

12 ])

13 node_to_output_grads_list[inp].append(input_grads[idx])

14